Disney Research and the Swiss Federal Institute of Technology in Zurich (ETH Zurich) have developed a system that can transform stereoscopic content into multi-view content in real time.

Existing stereoscopic 3D content, which carries only two views of a scene, does not currently work on commercially available ‘glasses-less’ 3D displays.

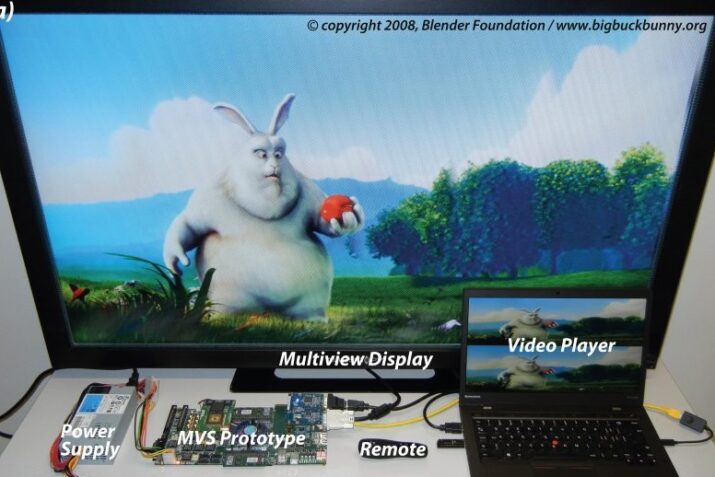

Michael Schaffner and his colleagues describe a combination of computer algorithms and hardware that converts existing stereoscopic 3D content for use on these glasses-less displays, known as multi-view autostereoscopic displays, or MADs.

Not only does the process operate automatically and in real time, but the hardware could also be integrated into a system-on-chip (SoC), making it suitable for mobile applications.

“The full potential of this new 3D technology won’t be achieved simply by eliminating the need for glasses,” said Markus Gross, vice president of research at Disney Research.

“We also need content, which is largely non-existent in this new format and often impractical to transmit, even when it does exist. It’s critical that the systems necessary for generating that content be so efficient and so mobile that they can be used in any device, anywhere,” Gross added.

MADs enable a 3D experience by simultaneously projecting several views of a scene, rather than just the two views of conventional, stereoscopic 3D content.

Because existing content supplies only two views, researchers have begun to develop a number of multi-view synthesis (MVS) methods to bridge this gap.

The team took an approach based on image domain warping, or IDW. Using this method, the researchers were able to synthesise eight high-definition views in real time.

The results were presented at the IEEE International Conference on Visual Communications and Image Processing, which was held in Singapore from December 13 to 16.